Small object detection by Slicing Aided Hyper Inference (SAHI)

In surveillance applications, detecting small objects and objects far away in the scene is difficult in practice. Because such objects are represented by only a few pixels in the image, conventional detectors struggle to find them. In this article, we will look at why current state-of-the-art (SOTA) models fail to recognize distant objects, and at Slicing Aided Hyper Inference (SAHI), a strategy presented by Fatih Akyon et al. to address this problem. The major points to be discussed are listed below.

Table of contents

- The small object detection problem

- How SAHI helps to detect small objects

- Implementing the method

Let’s start the discussion by understanding the problem of small object detection.

The small object detection problem

Object detection is the task of locating every object of interest in an input image by drawing a bounding box around it and classifying it into a category. Several approaches have been proposed to accomplish this, ranging from traditional methods to deep learning-based alternatives.

Object detection approaches fall into two categories: two-stage approaches based on region proposal algorithms, and one-stage approaches, which are unified, real-time networks based on regression or classification.

Adoption of deep learning architectures in this field has produced very accurate detectors such as Faster R-CNN and RetinaNet, which have been further refined into Cascade R-CNN, VarifocalNet, and other variants. These detectors are all trained and validated on well-known datasets such as ImageNet, Pascal VOC12, and MS COCO.

These datasets generally consist of low-resolution images (640 × 480 pixels) containing large objects with high pixel coverage (on average, 60 percent of the image height). While models trained on them perform well on similar input data, they are much less accurate at detecting small objects in high-resolution images captured by high-end drones and surveillance cameras.
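To get a feel for the numbers, here is a back-of-the-envelope sketch. The frame width, object width, and 640-pixel network input below are illustrative assumptions, not values from the SAHI paper; the point is only that downscaling a high-resolution frame to a typical detector input leaves a distant object with very few pixels.

```python
# Illustrative assumption: a 4K drone frame is downscaled so that its width
# matches a 640-pixel network input, keeping the aspect ratio.
frame_width = 3840     # hypothetical source frame width in pixels
network_width = 640    # hypothetical detector input width in pixels
object_width = 30      # hypothetical width of a distant car in the frame

# The same scale factor applies to every object in the frame.
scale = network_width / frame_width
object_width_at_input = object_width * scale

print(f"scale factor: {scale:.3f}")
print(f"object width seen by the detector: {object_width_at_input:.1f} px")
```

With these made-up numbers, a car that is 30 pixels wide in the original frame is only about 5 pixels wide by the time the detector sees it.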

Small object detection is thus a difficult task in computer vision: in addition to the small representations of the objects, the diversity of input images makes the task harder. For example, images come in different resolutions, and when the resolution is low, the detector may have difficulty detecting small objects.

How SAHI helps to detect small objects

To address the small object detection problem, Fatih Akyon et al. presented Slicing Aided Hyper Inference (SAHI), an open-source framework that provides a generic slicing-aided inference and fine-tuning pipeline for small object detection. Slicing is used during both the fine-tuning and the inference stages.

Splitting the input images into overlapping slices gives small objects a relatively larger pixel area with respect to the image fed into the network. The slicing aided inference step is generic in that it can be applied on top of any existing object detector without needing to fine-tune it. The authors integrated the approach with Detectron2, MMDetection, and YOLOv5 models.
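One way to picture the slicing step is as a grid of overlapping windows. The sketch below is an assumed, simplified tiling scheme written for this article, not SAHI's own code: the function names are hypothetical, and the last window on each axis is shifted back so that it stays inside the image.

```python
def slice_starts(length, slice_size, overlap_ratio):
    """Start offsets of overlapping slices along one axis."""
    if slice_size >= length:
        return [0]
    # Consecutive slices share `overlap_ratio` of their size.
    stride = slice_size - int(slice_size * overlap_ratio)
    starts = []
    pos = 0
    while pos + slice_size < length:
        starts.append(pos)
        pos += stride
    # Shift the final slice back so it ends exactly at the image border.
    starts.append(length - slice_size)
    return starts


def slice_boxes(image_width, image_height, slice_width, slice_height,
                overlap_width_ratio=0.2, overlap_height_ratio=0.2):
    """Return (x1, y1, x2, y2) boxes covering the image with overlapping slices."""
    boxes = []
    for y in slice_starts(image_height, slice_height, overlap_height_ratio):
        for x in slice_starts(image_width, slice_width, overlap_width_ratio):
            boxes.append((x, y,
                          min(x + slice_width, image_width),
                          min(y + slice_height, image_height)))
    return boxes


# Hypothetical 640 x 480 image cut into 256 x 256 slices with 20% overlap.
boxes = slice_boxes(640, 480, 256, 256)
for box in boxes:
    print(box)
```

Each box can then be cropped out of the image and passed to the detector as if it were an image of its own.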

As previously discussed, models pre-trained on these datasets perform very well on similar inputs, but they are significantly less accurate at small object detection in high-resolution images from high-end drone and surveillance cameras. To address this, the framework augments the fine-tuning dataset by extracting patches from its images.

Each image is sliced into overlapping patches whose dimensions are chosen within predefined ranges; these ranges are treated as hyper-parameters. Then, during fine-tuning, the patches are resized while maintaining the aspect ratio so that the image width is between 800 and 1333 pixels, which yields augmentation images with larger relative object sizes than the original image. These images are used during fine-tuning together with the original images.
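The resizing arithmetic can be sketched in a few lines. The helper name `width_scale` and the sample sizes are made up for illustration; only the 800 to 1333 pixel width range comes from the text.

```python
def width_scale(width, min_width=800, max_width=1333):
    """Scale factor that brings `width` into [min_width, max_width].

    The same factor is meant to be applied to the height as well,
    so the aspect ratio is preserved.
    """
    if width < min_width:
        return min_width / width
    if width > max_width:
        return max_width / width
    return 1.0


object_width = 30  # hypothetical object width in pixels

# A whole 3840-pixel-wide frame has to be shrunk to fit the range.
full_scale = width_scale(3840)
# A 512-pixel-wide patch cut from the same frame is enlarged instead.
patch_scale = width_scale(512)

print(f"object in resized full frame: {object_width * full_scale:.1f} px")
print(f"object in resized patch:      {object_width * patch_scale:.1f} px")
```

Under these assumptions, the same object ends up roughly 4.5 times wider in the resized patch than in the resized full frame, which is the "larger relative object size" effect the fine-tuning step relies on.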

Slicing is also used during the inference step. Here, the original query image is cut into a number of overlapping patches, and each patch is resized while preserving its aspect ratio. An object detection forward pass is then run on each patch independently. To detect larger objects, an optional full-inference (FI) pass over the original image can be added. Finally, the overlapping predictions and, if used, the FI results are merged back into the coordinates of the original image.
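The merging step can be sketched as follows. This is a minimal illustration under stated assumptions, not SAHI's implementation: it handles a single class, uses a plain greedy non-maximum suppression with an assumed IoU threshold of 0.5, and all function names and sample detections are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def to_full_image(detections, slice_origin):
    """Shift slice-local boxes by the slice's top-left corner."""
    ox, oy = slice_origin
    return [((x1 + ox, y1 + oy, x2 + ox, y2 + oy), score)
            for (x1, y1, x2, y2), score in detections]


def greedy_nms(detections, iou_threshold=0.5):
    """Keep the highest-scoring box among heavily overlapping ones."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections, in slice-local coordinates, as (box, score).
slice_a = [((210, 100, 250, 130), 0.8)]                           # slice at (0, 0)
slice_b = [((6, 101, 46, 131), 0.6), ((100, 50, 120, 65), 0.7)]   # slice at (205, 0)

all_detections = to_full_image(slice_a, (0, 0)) + to_full_image(slice_b, (205, 0))
merged = greedy_nms(all_detections)
for box, score in merged:
    print(box, score)
```

In this toy example the first detection of each slice refers to the same object seen in the overlap region, so only the higher-scoring copy survives, while the third detection is kept untouched.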

Now let’s see in practice how much of a difference it makes.

Implementing the method

In this section, we’ll see how slicing aided hyper inference can detect small objects in images. We’ll compare the predictions of plain YOLOv5 with those of SAHI + YOLOv5.

Now, let’s start with installing and importing the dependencies.

# install latest SAHI and YOLOv5
!pip install -U torch sahi yolov5

# import required functions, classes
from sahi.utils.yolov5 import download_yolov5s6_model
from sahi.model import Yolov5DetectionModel
from sahi.utils.cv import read_image
from sahi.predict import get_prediction, get_sliced_prediction
from IPython.display import Image

Now we’ll download the standard YOLOv5 weights and wrap them in a Yolov5DetectionModel instance.

# download the YOLOv5s6 weights
yolov5_model_path = 'yolov5s6.pt'
download_yolov5s6_model(destination_path=yolov5_model_path)

# wrap the weights in a SAHI detection model
detection_model = Yolov5DetectionModel(
    model_path=yolov5_model_path,
    confidence_threshold=0.3,
    device="cuda:0",  # use "cpu" if no GPU is available
)

Let’s get and visualize the predictions. Here we use the get_prediction function from the framework, which takes an image and a detection model. We then export the result to the current working directory and display it with IPython’s Image.

result = get_prediction(read_image("/content/mumbai-bridge-1.jpg"), detection_model)

# export the result to the current working directory
result.export_visuals(export_dir=".")

# show the result, which is saved as prediction_visual.png
Image("prediction_visual.png")

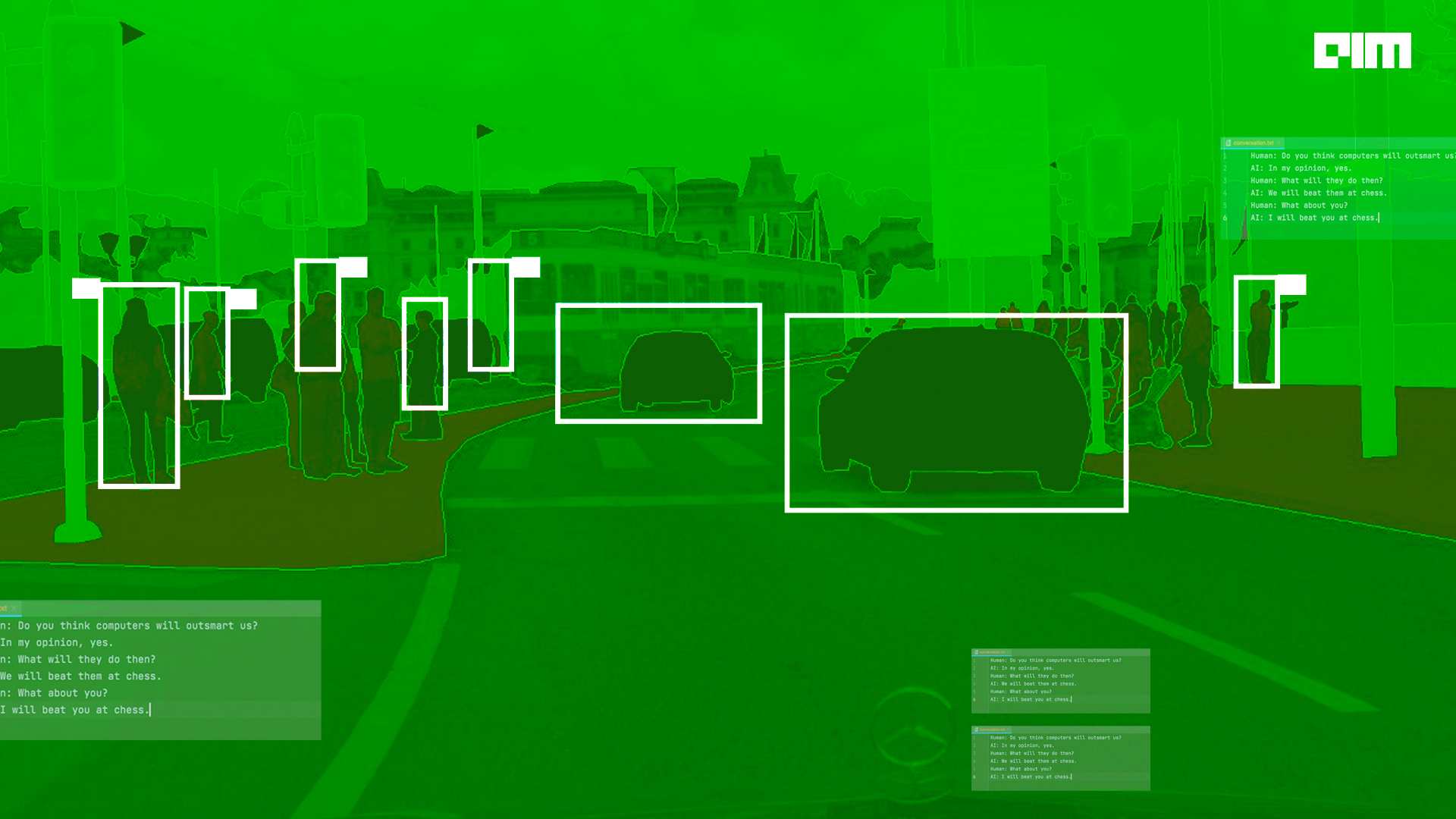

As the result shows, standard YOLOv5 misses the cars at the far end of the flyover. Now let’s see how plugging in SAHI improves the overall result by running sliced inference.

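result = get_sliced_prediction(
    read_image("/content/mumbai-bridge-1.jpg"),
    detection_model,
    slice_height=256,
    slice_width=256,
    overlap_height_ratio=0.2,
    overlap_width_ratio=0.2,
)

# export and show the sliced prediction
result.export_visuals(export_dir=".")
Image("prediction_visual.png")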

And here is the result.

Isn’t it like magic?
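The improvement is not free, though: every slice costs one forward pass. As a rough estimate, the sketch below counts slices under an assumed tiling scheme (a fixed stride, with the last slice on each axis shifted back to fit). The function names and the 1920 × 1080 image size are hypothetical, since the size of the test image is not stated here, and SAHI's own slicing may differ in its details.

```python
import math


def slices_per_axis(length, slice_size, overlap_ratio):
    """Number of overlapping slices needed to cover one axis."""
    if slice_size >= length:
        return 1
    stride = slice_size - int(slice_size * overlap_ratio)
    return math.ceil((length - slice_size) / stride) + 1


def slice_count(image_width, image_height, slice_width, slice_height,
                overlap_width_ratio, overlap_height_ratio):
    """Total number of slices, i.e. forward passes, for one image."""
    return (slices_per_axis(image_width, slice_width, overlap_width_ratio)
            * slices_per_axis(image_height, slice_height, overlap_height_ratio))


# Hypothetical Full HD image with the settings used above, and with larger slices.
passes_256 = slice_count(1920, 1080, 256, 256, 0.2, 0.2)
passes_512 = slice_count(1920, 1080, 512, 512, 0.2, 0.2)
print(passes_256, passes_512)
```

For this hypothetical image, 256-pixel slices mean 60 forward passes while 512-pixel slices need 15, so the slice size is a trade-off between how much small objects are enlarged and how long inference takes.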

Final words

In this article, we discussed object detection and, in more detail, how standard SOTA models such as YOLO and Faster R-CNN fail at detecting small objects, which is a common problem for images taken by drones and similar cameras. To overcome this, we looked at SAHI, a plug-in style method that improves detection on a given image mainly by slicing it and running inference on each slice; the result of this method is shown above.