Archives for LSTM Network

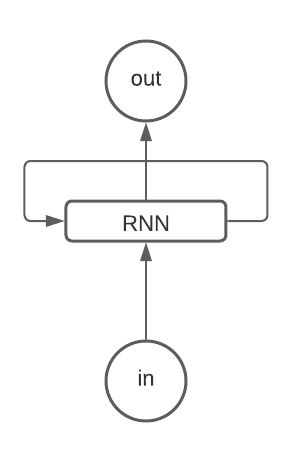

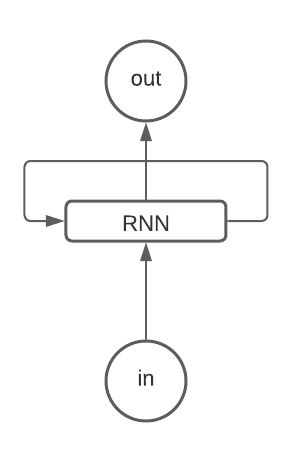

Long Short-Term Memory (LSTM) is a special kind of RNN capable of learning long-term dependencies. It was introduced by Hochreiter and Schmidhuber in 1997 and is explicitly designed to avoid the long-term dependency problem. Retaining information across long sequences is its default behaviour.

The post LSTM Vs GRU in Recurrent Neural Network: A Comparative Study appeared first on Analytics India Magazine.
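As a rough illustration of how an LSTM retains information across a long sequence, here is a minimal sketch of a single scalar LSTM cell in plain Python. The gate names, the `lstm_step` helper and the weight values are assumptions chosen for illustration; they are not taken from the post.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, w):
    """One step of a scalar LSTM cell; w maps gate name -> (w_x, w_h, bias)."""
    def pre(gate):
        w_x, w_h, b = w[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    i = sigmoid(pre("input"))    # how much of the candidate to write
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cand"))   # candidate value for the cell state
    c = f * c_prev + i * g       # updated cell state (the long-term memory)
    h = o * math.tanh(c)         # updated hidden state (the output)
    return h, c

# Hypothetical weights: forget gate close to 1, input gate close to 0,
# so the cell should hold on to its initial memory.
weights = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 10.0),
    "cand": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a cell state of 1.0
for x in [0.5, -0.3, 0.8, 0.1]:
    h, c = lstm_step(x, h, c, weights)
print(c)  # stays close to the initial cell state
```

With the forget gate saturated near 1 and the input gate near 0, the cell state passes through the steps almost unchanged. This additive path is what lets an LSTM carry information over many time steps.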

Adding an attention layer to an LSTM or Bi-LSTM can improve the model's performance and helps it produce more accurate sequence predictions. This is especially helpful in NLP modelling with large datasets.

The post Hands-On Guide to Bi-LSTM With Attention appeared first on Analytics India Magazine.
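One common form of attention over recurrent outputs is dot-product attention. The sketch below is in plain Python and is not necessarily the layer used in the guide. The hidden states, the query vector and the `attention_pool` helper are made up for illustration.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden_states, query):
    """Weight each time step's hidden state by its dot product with the query."""
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Context vector: attention-weighted sum of the hidden states.
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states))
               for d in range(dim)]
    return weights, context

# Hypothetical (Bi-)LSTM outputs, one vector per time step.
hidden_states = [[0.1, 0.0], [0.9, 0.2], [0.2, 0.1]]
query = [1.0, 0.0]  # hypothetical learned query vector

attn_weights, context = attention_pool(hidden_states, query)
print(attn_weights, context)
```

The weights sum to one, and the time step whose hidden state best matches the query contributes most to the context vector passed on to the next layer.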

Bidirectional long short-term memory (Bidirectional LSTM) is the process of giving a neural network the sequence information in both directions: backwards (future to past) and forwards (past to future).

The post Complete Guide To Bidirectional LSTM (With Python Codes) appeared first on Analytics India Magazine.
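The two directions described in the Bidirectional LSTM excerpt can be sketched as follows. This is a simplified illustration: a plain tanh recurrence stands in for the LSTM cell, and both directions share the same hypothetical weights, whereas a real bidirectional layer learns separate weights for each direction.

```python
import math

def run_rnn(sequence, w_x=0.5, w_h=0.5):
    """Toy recurrent pass: returns the hidden state after each time step."""
    h, states = 0.0, []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def bidirectional(sequence):
    forward = run_rnn(sequence)               # past -> future
    backward = run_rnn(sequence[::-1])[::-1]  # future -> past, re-aligned to input order
    # Each time step now has one state summarising the past and one the future.
    return list(zip(forward, backward))

outputs = bidirectional([1.0, 0.0, 0.0])
for step, (fwd, bwd) in enumerate(outputs):
    print(step, fwd, bwd)
```

At the last time step the forward state still carries a trace of the first input, while the backward state has seen nothing but the final zero. At the first time step it is the other way around: the backward state has read the whole sequence.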

This is the 21st century, and it has been revolutionary for the development of machines so far and enabled us...

The post How To Do Multivariate Time Series Forecasting Using LSTM appeared first on Analytics India Magazine.

Introduced in the 1970s, Hopfield networks were popularised by John Hopfield in 1982. For most of machine learning history, Hopfield networks have been sidelined due to their own shortcomings and the introduction of superior architectures such as Transformers (now used in BERT, etc.). Sepp Hochreiter, co-creator of LSTMs, with a team of researchers,…

The post Is Hopfield Networks All You Need? LSTM Co-Creator Sepp Hochreiter Weighs In appeared first on Analytics India Magazine.

In this article, we will discuss the Long Short-Term Memory (LSTM) recurrent neural network, one of the popular deep learning models used in stock market prediction. In this task, we will automatically fetch a stock's historical data using Python libraries and fit the LSTM model on this data to predict the stock's future prices.

The post Hands-On Guide To LSTM Recurrent Neural Network For Stock Market Prediction appeared first on Analytics India Magazine.
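Before a recurrent model can be fitted to a price series, the series is usually cut into fixed-length input windows, each paired with the value that follows it. The sketch below shows that step only; the `make_windows` helper, the `lookback` value and the prices are made up for illustration and do not come from the guide.

```python
def make_windows(series, lookback):
    """Split a series into (input window, next value) pairs."""
    inputs, targets = [], []
    for start in range(len(series) - lookback):
        inputs.append(series[start:start + lookback])  # the last `lookback` values
        targets.append(series[start + lookback])       # the value to predict
    return inputs, targets

prices = [101.0, 102.5, 101.8, 103.2, 104.0, 103.5]  # made-up closing prices
X, y = make_windows(prices, lookback=3)

for window, target in zip(X, y):
    print(window, "->", target)
```

Each row of `X` would be one training sequence for the LSTM and the matching entry of `y` its target. In practice the values are typically scaled before training.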

There are close to 3.5 billion smartphone users in the world, and if you happen to be one of them, then the chances are high that Juergen Schmidhuber has already touched your life. Be it Apple’s Siri or Amazon’s Alexa, all the top speech and voice assistants work on LSTM or Long Short…

The post Interview With Juergen Schmidhuber appeared first on Analytics India Magazine.

Normal neural networks are feedforward neural networks, wherein the input data travels in only one direction, i.e., forward from the input nodes through the hidden layers and finally to the output layer. Recurrent Neural Networks, on the other hand, are a bit more complicated. The data travels in cycles through different layers. To put it a…

The post How To Code Your First LSTM Network In Keras appeared first on Analytics India Magazine.