Archives for Model parallelism

The difference between these two approaches maps naturally to the heterogeneity of a typical compute cluster.

The post Automating model parallelism with just one line of code appeared first on Analytics India Magazine.

Model parallelism seemed more apt for DNN models as more GPUs were added.

PaLM is not only trained with Google's much-publicised Pathways system (introduced last year); it also avoids pipeline parallelism, a strategy traditionally used for large language models.

22 Apr

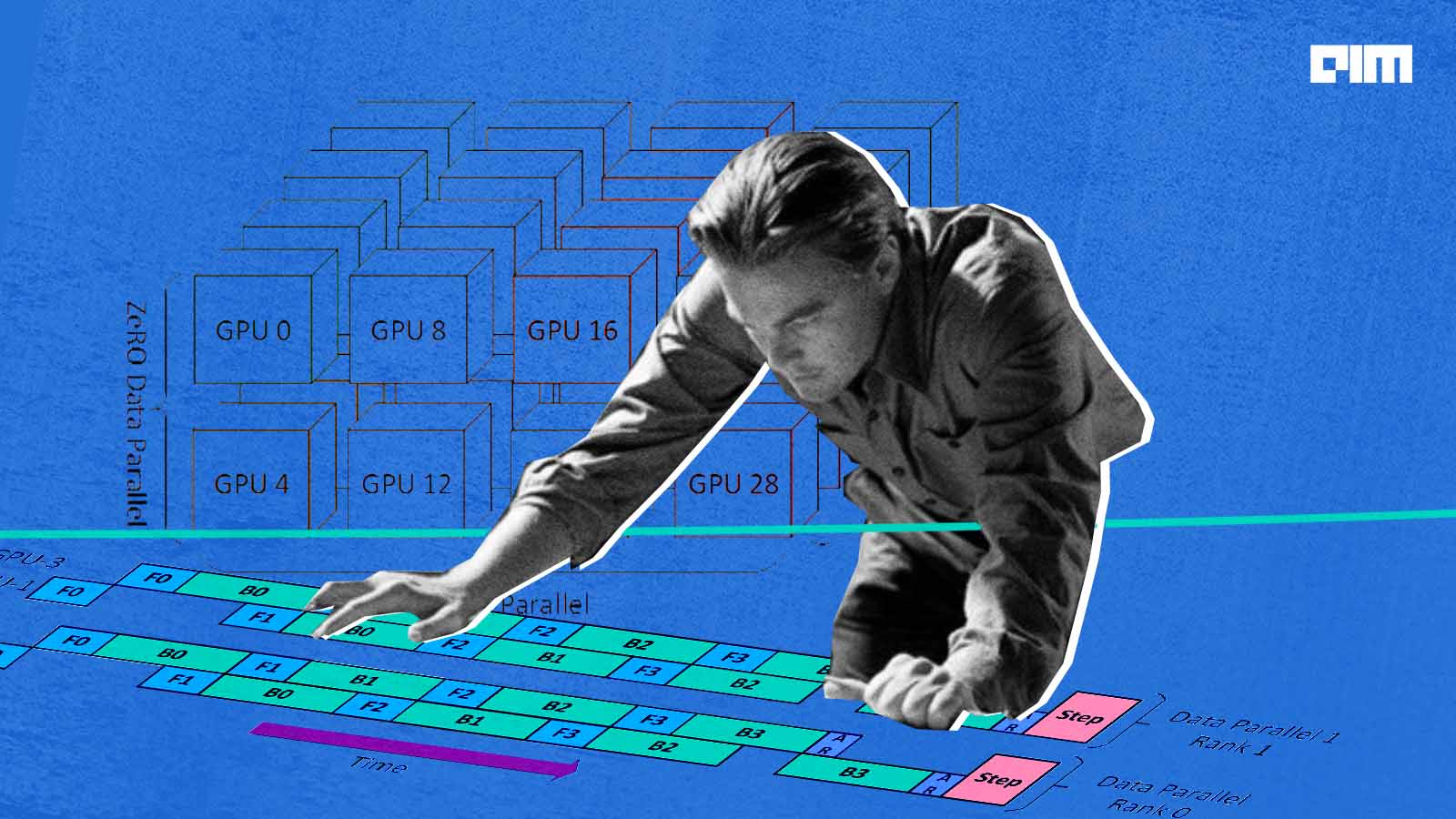

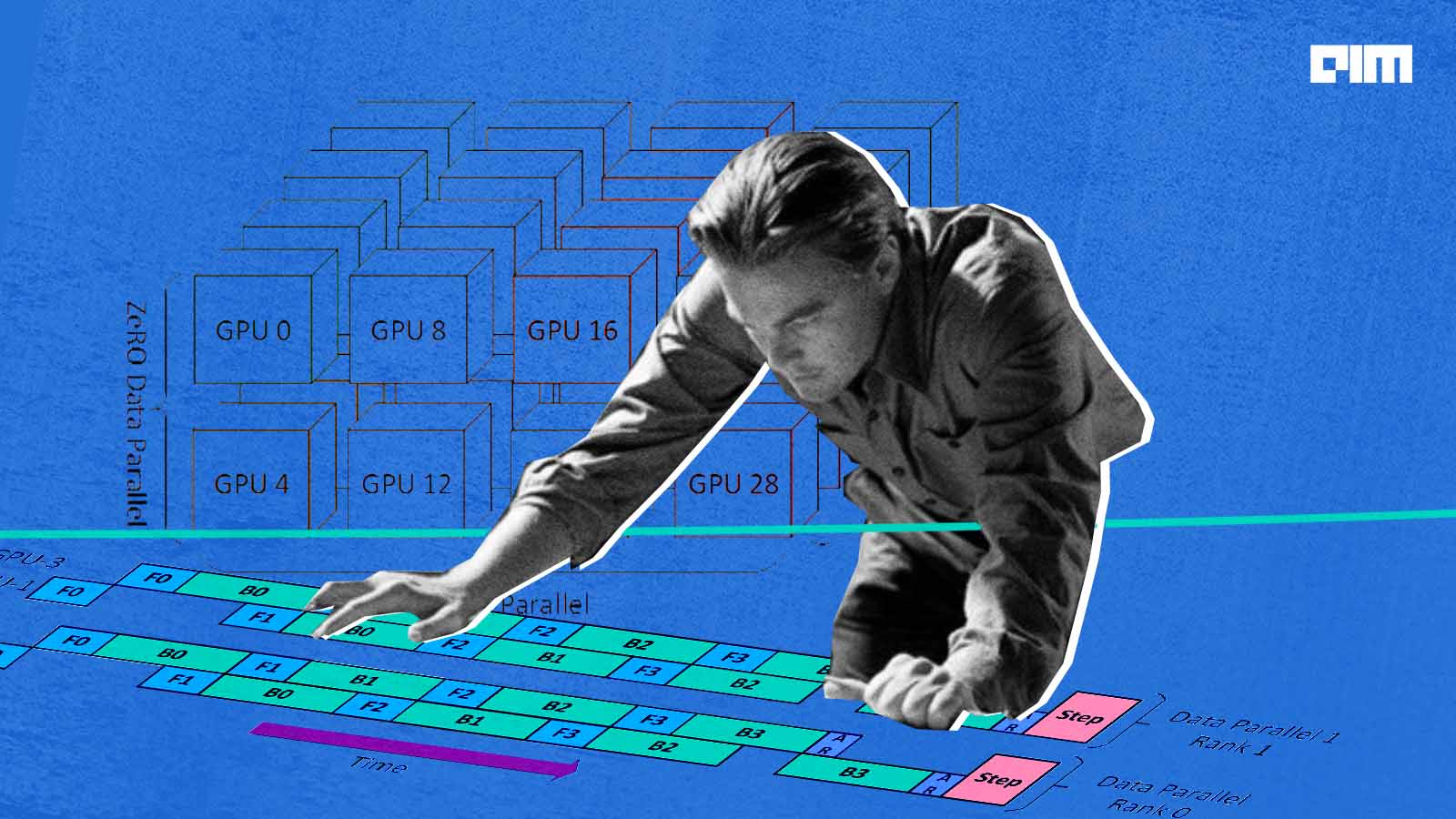

Behind NVIDIA’s Megatron

The team ran training iterations on a trillion-parameter model at 502 petaFLOP/s on 3072 GPUs by combining three parallelism techniques: tensor, pipeline, and data parallelism.
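For a sense of scale, the quoted figures imply an average throughput per GPU. The Python sketch below is a minimal illustration of that arithmetic only; the function name `per_gpu_teraflops` is hypothetical, and it assumes the aggregate throughput is spread evenly across all GPUs.

```python
# Aggregate throughput and GPU count as quoted in the excerpt.
AGGREGATE_PETAFLOPS = 502
NUM_GPUS = 3072


def per_gpu_teraflops(aggregate_petaflops: float, num_gpus: int) -> float:
    """Average per-GPU throughput in teraFLOP/s.

    Assumes an even split across GPUs; 1 petaFLOP/s = 1000 teraFLOP/s.
    """
    return aggregate_petaflops * 1000 / num_gpus


per_gpu = per_gpu_teraflops(AGGREGATE_PETAFLOPS, NUM_GPUS)
print(f"{per_gpu:.1f} teraFLOP/s per GPU")  # prints "163.4 teraFLOP/s per GPU"
```

So each GPU sustains roughly 163 teraFLOP/s on average during those iterations.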
