Archives for word2vec

Word representations aim to capture the meaning of words, their semantic relationships, and the contexts in which they appear; this is where word embedding techniques come in. A word embedding is a dense vector representation of a word that captures some of the context in which that word occurs.

The post Hands-On Guide To Word Embeddings Using GloVe appeared first on Analytics India Magazine.

In natural language processing, word embedding is a term for the representation of words for text analysis…

The post Guide To Word2vec Using Skip Gram Model appeared first on Analytics India Magazine.

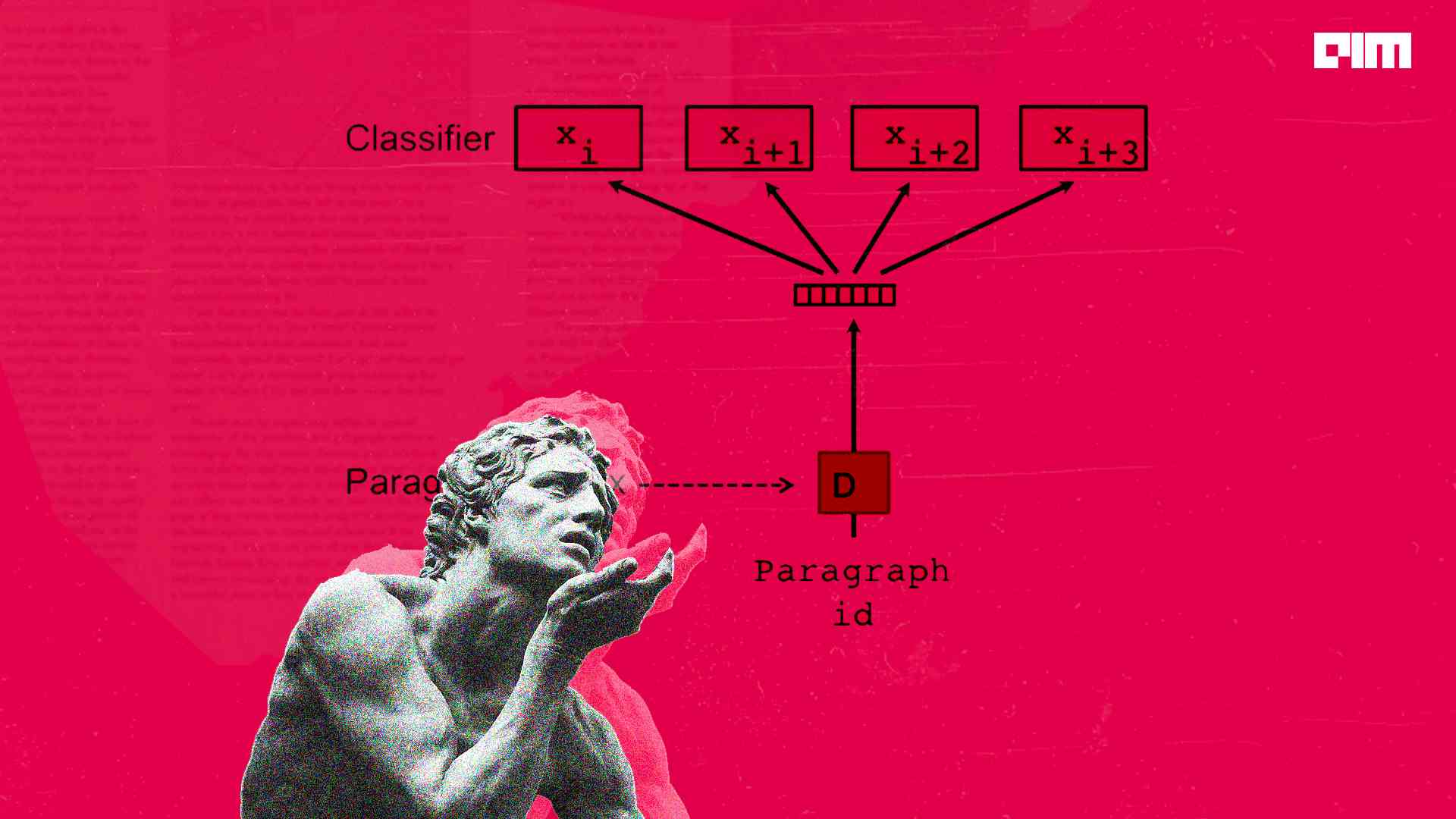

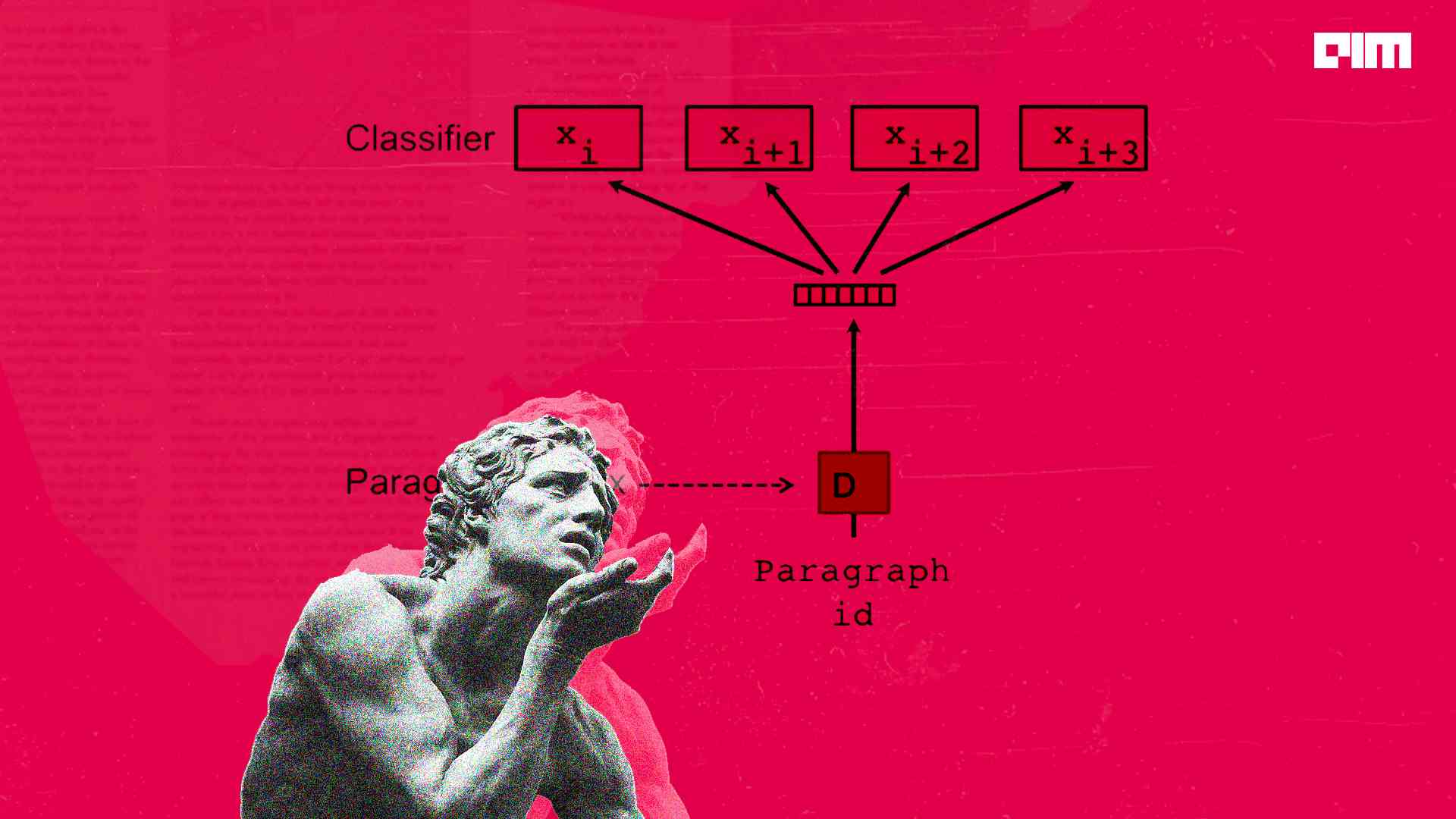

Sense2vec is a neural network model that generates vector space representations of words from large corpora. It is an extension of the well-known word2vec algorithm. Sense2vec creates embeddings for “senses” rather than for word tokens.

The post Guide to Sense2vec – Contextually Keyed Word Vectors for NLP appeared first on Analytics India Magazine.

Facebook has been doing a lot in the field of natural language processing (NLP). The tech giant has achieved remarkable breakthroughs in natural language understanding and language translation in recent years. Now researchers at Facebook are implementing semi-supervised and self-supervised learning techniques to leverage unlabelled data, which helps improve the performance of the machine…

The post Facebook Introduces New Model For Word Embeddings Which Are Resilient To Misspellings appeared first on Analytics India Magazine.

It is genius because it can neither be decoded nor mimicked. It can be remade or copied, but it can never again be unique. To laymen, the works of great composers fall somewhere between their mood swings and status signaling. But one cannot, in all seriousness, comprehend what went into writing the “Requiem for a…

The post Can Word2Vec Model Spill The Secrets Of Mozart’s Music? appeared first on Analytics India Magazine.