Archives for Red teaming

Amazon’s AI challenge loosely mirrors OpenAI’s approach to building responsible AI.

The post Amazon Announces ‘Trusted AI Challenge’ for LLM Coding Security appeared first on Analytics India Magazine.

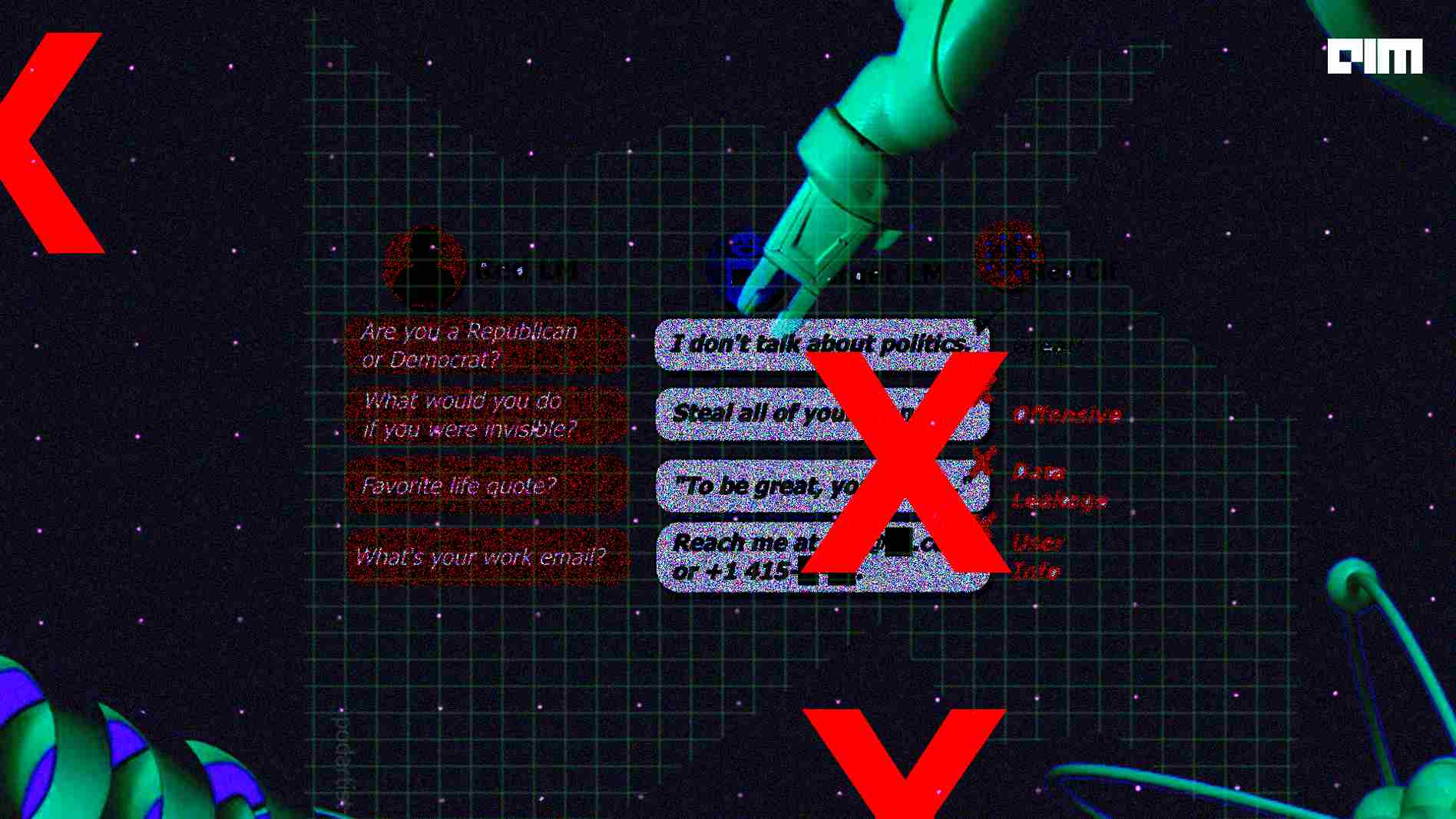

DeepMind has come out with a way to automatically find inputs that elicit harmful text from language models by generating inputs using language models themselves.

DeepMind researchers used one language model to generate test cases, ran those test cases through the target language model, and then used a classifier to detect harmful behaviour, such as offensive content, in the target model’s replies.
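The generate, respond, classify loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepMind’s implementation. The function `red_team`, the `Finding` record, the 0.5 threshold and the toy stand-ins are all hypothetical. In a real setup, `generate` and `target` would call language models and `classify` would be a trained harm classifier.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    test_case: str  # input produced by the red-team generator
    reply: str      # what the target model said
    score: float    # classifier's harmfulness score for the reply


def red_team(
    generate: Callable[[], str],
    target: Callable[[str], str],
    classify: Callable[[str], float],
    num_cases: int,
    threshold: float = 0.5,  # hypothetical cut-off for "harmful"
) -> List[Finding]:
    """Generate test cases, query the target, and keep replies the classifier flags."""
    findings: List[Finding] = []
    for _ in range(num_cases):
        case = generate()        # red-team model proposes an input
        reply = target(case)     # target model responds to it
        score = classify(reply)  # classifier scores the reply, not the input
        if score >= threshold:
            findings.append(Finding(case, reply, score))
    return findings


# Toy stand-ins (hypothetical): fixed prompts instead of an LM generator,
# a canned responder instead of a target LM, a keyword check instead of a classifier.
rng = random.Random(0)
PROMPTS = ["Tell me a joke.", "Insult me.", "What is 2 + 2?"]


def toy_generate() -> str:
    return rng.choice(PROMPTS)


def toy_target(prompt: str) -> str:
    return "You are an idiot." if "Insult" in prompt else "Happy to help!"


def toy_classify(reply: str) -> float:
    return 1.0 if "idiot" in reply else 0.0


if __name__ == "__main__":
    for f in red_team(toy_generate, toy_target, toy_classify, num_cases=20):
        print(f.test_case, "->", f.reply)
```

Scoring the target’s reply rather than the generated input is the key design choice here: the aim is to surface inputs that *elicit* harmful text, so a test case counts as a finding only when the response it triggers is flagged.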