Archives for XGBoost

“What we've seen with generative AI is the ability for it to seemingly reason about test scenarios that could be interesting but may have been overlooked,” said Rangarajan Vasudevan, CDO of Lentra.

The post AI-Powered Innovation: Lentra’s Role in Shaping the Future of Indian Banking appeared first on Analytics India Magazine.

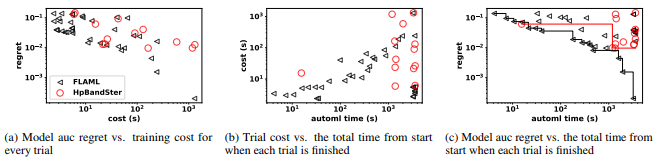

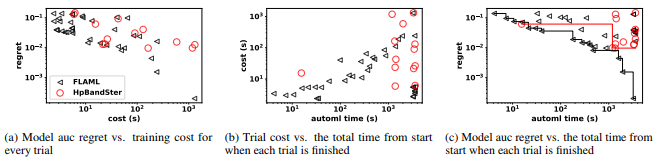

FLAML is an open-source automated machine learning (AutoML) library for Python. It leverages the structure of the search space and is designed to perform efficiently and robustly without relying on meta-learning, unlike some other AutoML approaches. To choose a search order optimized for both cost and error, it iteratively decides the learner, hyperparameters, sample size and resampling strategy, taking into account their compound impact on both the cost and the error of the model as the search proceeds.

The post Microsoft FLAML VS Traditional ML Algorithms: A Practical Comparison appeared first on Analytics India Magazine.
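To make the cost/error trade-off in the FLAML summary above more concrete, here is a deliberately simplified sketch of a cost-aware search loop. It is not FLAML's algorithm or API: the two learners, their error curves and per-row costs, and the rule "run the cheapest pending trial, double a learner's sample size while it keeps beating the best error so far, otherwise stop spending on it" are all hypothetical assumptions chosen for illustration. FLAML's own search also tunes hyperparameters and the resampling strategy, which this toy ignores.

```python
"""Toy cost-aware search. Learners, curves and rules are hypothetical and
much simpler than FLAML's actual search strategy."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Learner:
    name: str
    error_floor: float   # hypothetical error with unlimited data
    cost_per_row: float  # hypothetical training cost per row


def toy_trial(learner, n_rows):
    """Made-up error curve: more rows -> lower error, approaching the floor."""
    return learner.error_floor + 1.0 / n_rows ** 0.5


def cost_aware_search(learners, budget, start_rows=100, max_rows=6400):
    next_rows = {l.name: start_rows for l in learners}  # pending sample size per learner
    active = {l.name for l in learners}                 # learners still worth growing
    best_error, best_choice, spent = float("inf"), None, 0.0
    while True:
        pending = [l for l in learners
                   if l.name in active and next_rows[l.name] <= max_rows]
        if not pending:
            break
        # Cheapest pending trial first (in this toy, cost is known before training).
        learner = min(pending, key=lambda l: l.cost_per_row * next_rows[l.name])
        n = next_rows[learner.name]
        cost = learner.cost_per_row * n
        if spent + cost > budget:
            break  # even the cheapest remaining trial no longer fits the budget
        spent += cost
        error = toy_trial(learner, n)
        if error < best_error:
            best_error, best_choice = error, (learner.name, n)
            next_rows[learner.name] = 2 * n  # promising: try a larger sample next
        else:
            active.discard(learner.name)     # no improvement: stop spending on it
    return best_choice, best_error, spent


learners = [Learner("linear", 0.20, 0.001), Learner("gbm", 0.10, 0.01)]
choice, err, spent = cost_aware_search(learners, budget=100.0)
print(choice, round(err, 4), round(spent, 1))  # ('gbm', 3200) 0.1177 66.1
```

Starting with cheap trials lets the loop discard the weaker learner after spending very little, and then put most of the budget into growing the sample for the promising one.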

Between October and December 2016, Kaggle organised a competition in which over 3,000 participants competed to predict the loss value associated with claims at the American insurance company Allstate. In 2017, Google scholar Alexy Noskov took second place in the competition. In a blog post on Kaggle, Noskov walked readers through his work. The primary models he employed were…

The post Story of Gradient Boosting: How It Evolved Over Years appeared first on Analytics India Magazine.

When asked about his approach to data science problems, Sergey Yurgenson, Director of Data Science at DataRobot, said he would begin by creating a benchmark model using Random Forests or XGBoost with minimal feature engineering. Sergey, a Harvard-trained neurobiologist, and his peers on Kaggle have used XGBoost (extreme gradient boosting), a gradient boosting…

The post Deep Learning, XGBoost, Or Both: What Works Best For Tabular Data? appeared first on Analytics India Magazine.

Ensemble learning is the process of combining multiple machine learning models through a mathematical rule, such as voting or averaging, to obtain better predictive performance than any single model achieves on its own.

The post Comprehensive Guide To Ensemble Methods For Data Scientists appeared first on Analytics India Magazine.
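As a minimal illustration of combining models "in a mathematical way", the sketch below implements two of the simplest combination rules: majority voting for class labels and averaging for numeric predictions. The three classifiers' outputs are made-up data; techniques such as bagging, boosting and stacking build on the same idea but are not shown here.

```python
from collections import Counter
from statistics import mean


def majority_vote(predictions):
    """Combine class labels: predictions[m][i] is model m's label for sample i."""
    # With an odd number of voters and two classes, ties cannot occur.
    return [Counter(labels).most_common(1)[0][0] for labels in zip(*predictions)]


def average(predictions):
    """Combine numeric outputs (e.g. regression values) by taking their mean."""
    return [mean(values) for values in zip(*predictions)]


truth = [1, 0, 1, 1]
model_a = [1, 0, 1, 0]  # wrong on sample 3
model_b = [1, 1, 1, 1]  # wrong on sample 1
model_c = [0, 0, 1, 1]  # wrong on sample 0
ensemble = majority_vote([model_a, model_b, model_c])
print(ensemble, ensemble == truth)  # [1, 0, 1, 1] True
```

Each toy model is right on three of the four samples, but because their mistakes fall on different samples, the vote is right on all four. Ensembles benefit most when their members' errors are not strongly correlated.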

The PyTerrier framework expresses retrieval pipelines as Python classes that can be composed to build an end-to-end, scalable information retrieval system.

The post Guide to PyTerrier: A Python Framework for Information Retrieval appeared first on Analytics India Magazine.
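To show the idea of building a retrieval pipeline out of composable Python classes, here is a small stand-alone sketch. PyTerrier's real transformers operate on pandas DataFrames and are composed with operators such as `>>`; the classes below (`Transformer`, `Then`, `TermOverlapRetriever`, `RankCutoff`) are hypothetical stand-ins that only mimic that style, and the term-overlap score is a toy substitute for a real ranking model such as BM25.

```python
class Transformer:
    """Hypothetical base class: every pipeline stage maps a dict to a dict."""

    def transform(self, data):
        raise NotImplementedError

    def __rshift__(self, other):
        # stage_a >> stage_b builds a new stage that runs a, then b.
        return Then(self, other)


class Then(Transformer):
    def __init__(self, first, second):
        self.first, self.second = first, second

    def transform(self, data):
        return self.second.transform(self.first.transform(data))


class TermOverlapRetriever(Transformer):
    """Toy ranker: score = number of distinct query terms found in the document."""

    def __init__(self, docs):
        self.docs = docs  # {docno: text}

    def transform(self, data):
        terms = set(data["query"].lower().split())
        scored = [(docno, len(terms & set(text.lower().split())))
                  for docno, text in self.docs.items()]
        # Drop non-matching documents; sort by score, then docno for stable ties.
        ranked = sorted((s for s in scored if s[1] > 0), key=lambda s: (-s[1], s[0]))
        return {**data, "results": ranked}


class RankCutoff(Transformer):
    """Keep only the top-k results."""

    def __init__(self, k):
        self.k = k

    def transform(self, data):
        return {**data, "results": data["results"][: self.k]}


docs = {
    "d1": "gradient boosting with trees",
    "d2": "boosting trees in xgboost",
    "d3": "information retrieval with python",
}
pipeline = TermOverlapRetriever(docs) >> RankCutoff(1)
print(pipeline.transform({"query": "xgboost boosting trees"})["results"])  # [('d2', 3)]
```

Because each stage shares one interface, stages can be swapped or chained without changing the others, which is the property that makes class-based pipelines convenient for IR experiments.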

In this article, I’ll discuss how XGBoost works internally to build decision trees and derive predictions from them.

The post Understanding XGBoost Algorithm In Detail appeared first on Analytics India Magazine.
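For a sense of what happens inside XGBoost when it grows a tree, the sketch below applies the split-gain and leaf-weight formulas from XGBoost's regularized objective to a single feature. With G and H the sums of first- and second-order gradients in a node, a split's gain is ½[G_L²/(H_L+λ) + G_R²/(H_R+λ) − (G_L+G_R)²/(H_L+H_R+λ)] − γ, and a leaf's optimal weight is −G/(H+λ); λ and γ correspond to XGBoost's `lambda` and `gamma` parameters. The assumptions here are squared-error loss (so each gradient is prediction minus target and each hessian is 1), one feature, exact greedy enumeration of thresholds, and plain Python lists instead of XGBoost's histogram-based and sparsity-aware machinery.

```python
def leaf_weight(G, H, lam):
    """Optimal leaf weight -G / (H + lambda)."""
    return -G / (H + lam)


def structure_score(G, H, lam):
    """G^2 / (H + lambda): the node-quality term that appears in the gain."""
    return G * G / (H + lam)


def best_split(x, y, y_pred, lam=1.0, gamma=0.0):
    """Exact greedy search over thresholds of one feature.

    Returns (gain, threshold); threshold is None if no split has positive gain.
    """
    # Squared-error loss 1/2 * (y - p)^2: gradient p - y, hessian 1.
    g = [p - t for p, t in zip(y_pred, y)]
    h = [1.0] * len(y)
    G, H = sum(g), sum(h)
    order = sorted(range(len(x)), key=lambda i: x[i])

    best_gain, best_thr = 0.0, None
    GL = HL = 0.0
    for pos in range(len(order) - 1):
        i, nxt = order[pos], order[pos + 1]
        GL += g[i]
        HL += h[i]
        if x[i] == x[nxt]:
            continue  # no threshold separates equal feature values
        GR, HR = G - GL, H - HL
        gain = 0.5 * (structure_score(GL, HL, lam)
                      + structure_score(GR, HR, lam)
                      - structure_score(G, H, lam)) - gamma
        if gain > best_gain:
            best_gain, best_thr = gain, (x[i] + x[nxt]) / 2
    return best_gain, best_thr


x = [1, 2, 3, 4]
y = [1, 1, 5, 5]
y_pred = [0, 0, 0, 0]  # hypothetical initial predictions
gain, threshold = best_split(x, y, y_pred, lam=1.0)
print(threshold, round(gain, 4))  # 2.5 2.9333
# Left/right leaf weights after the split: about 0.667 and 3.333.
print(leaf_weight(-2, 2, 1.0), leaf_weight(-10, 2, 1.0))
```

The leaf weights 2/3 and 10/3 are shrunk toward zero relative to the raw residual means (1 and 5) because λ = 1 is added to each leaf's hessian sum; raising `gamma` above the best gain would stop the split altogether.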

In recent times, ensemble techniques have become popular among data scientists and enthusiasts. Random Forest and Gradient Boosting algorithms used to win most data science competitions and hackathons, but over the last few years XGBoost has outperformed other algorithms on problems involving structured data. Beyond its predictive accuracy, XGBoost is also known for its speed and scalability. XGBoost is built on the gradient boosting framework.

The post Complete Guide To XGBoost With Implementation In R appeared first on Analytics India Magazine.
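Since XGBoost is built on gradient boosting, a from-scratch sketch of plain gradient boosting may help: start from a constant prediction, then repeatedly fit a small tree to the negative gradient of the loss (for squared error, simply the residual) and add a shrunken copy of it to the model. The one-split "stump" learner on a single numeric feature, the learning rate of 0.1 and the toy data are assumptions for illustration; XGBoost adds second-order gradients, regularization and many engineering optimizations on top of this basic loop.

```python
def fit_stump(x, r):
    """Fit a one-split regression stump to targets r on a 1-D feature x.

    Assumes x has at least two distinct values.
    """
    best = None
    values = sorted(set(x))
    for a, b in zip(values, values[1:]):
        thr = (a + b) / 2
        left = [ri for xi, ri in zip(x, r) if xi <= thr]
        right = [ri for xi, ri in zip(x, r) if xi > thr]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - lv) ** 2 for ri in left) + sum((ri - rv) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lv, rv)
    _, thr, lv, rv = best
    return lambda xi: lv if xi <= thr else rv


def gradient_boost(x, y, n_rounds=50, learning_rate=0.1):
    base = sum(y) / len(y)  # constant start: the mean minimises squared error
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_rounds):
        # Negative gradient of 1/2 * (y - p)^2 with respect to p is the residual y - p.
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        pred = [pi + learning_rate * stump(xi) for pi, xi in zip(pred, x)]

    def predict(xi):
        return base + learning_rate * sum(s(xi) for s in stumps)

    return predict


model = gradient_boost([1, 2, 3, 4], [1, 1, 5, 5])
print(round(model(1), 3), round(model(4), 3))  # close to the targets 1 and 5
```

The small learning rate means each round only closes 10% of the remaining residual, so the predictions approach the targets gradually; this shrinkage is the same idea XGBoost exposes through its `eta` parameter.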