Archives for attention mechanism

Adding an attention layer to an LSTM or Bi-LSTM can improve the performance of the model and help it make more accurate sequence predictions. It is especially helpful in NLP modeling on large datasets.
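Here is a minimal plain-Python sketch of attention pooling. It assumes the Bi-LSTM has already produced one hidden vector per timestep, with the forward and backward states concatenated. The scoring vector `w` and the toy states are hypothetical. A real model learns `w` during training, and many variants pass each state through a `tanh` layer before scoring. The resulting weights show which timesteps contribute most to the final context vector.

```python
import math

def softmax(scores):
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(hidden_states, w):
    """Collapse a sequence of Bi-LSTM outputs into one context vector.

    hidden_states: list of T vectors, each the concatenation [h_fwd; h_bwd].
    w: scoring vector (hypothetical here; a trained model would learn it).
    Returns (context, weights).
    """
    # 1. Score each timestep with a plain dot product against w.
    scores = [sum(h_i * w_i for h_i, w_i in zip(h, w)) for h in hidden_states]
    # 2. Normalise the scores into attention weights that sum to 1.
    weights = softmax(scores)
    # 3. Context vector = attention-weighted sum of the hidden states.
    dim = len(hidden_states[0])
    context = [sum(a * h[d] for a, h in zip(weights, hidden_states)) for d in range(dim)]
    return context, weights

# Toy Bi-LSTM outputs: 3 timesteps, 2 forward + 2 backward units each.
states = [[0.1, 0.2, 0.0, 0.3],
          [0.9, 0.8, 0.7, 0.6],
          [0.2, 0.1, 0.4, 0.0]]
w = [1.0, 1.0, 1.0, 1.0]
context, weights = attention_pool(states, w)
print([round(a, 3) for a in weights])
```

The second timestep has the largest score, so it receives most of the weight and dominates the context vector passed to the classifier or decoder.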

The post Hands-On Guide to Bi-LSTM With Attention appeared first on Analytics India Magazine.

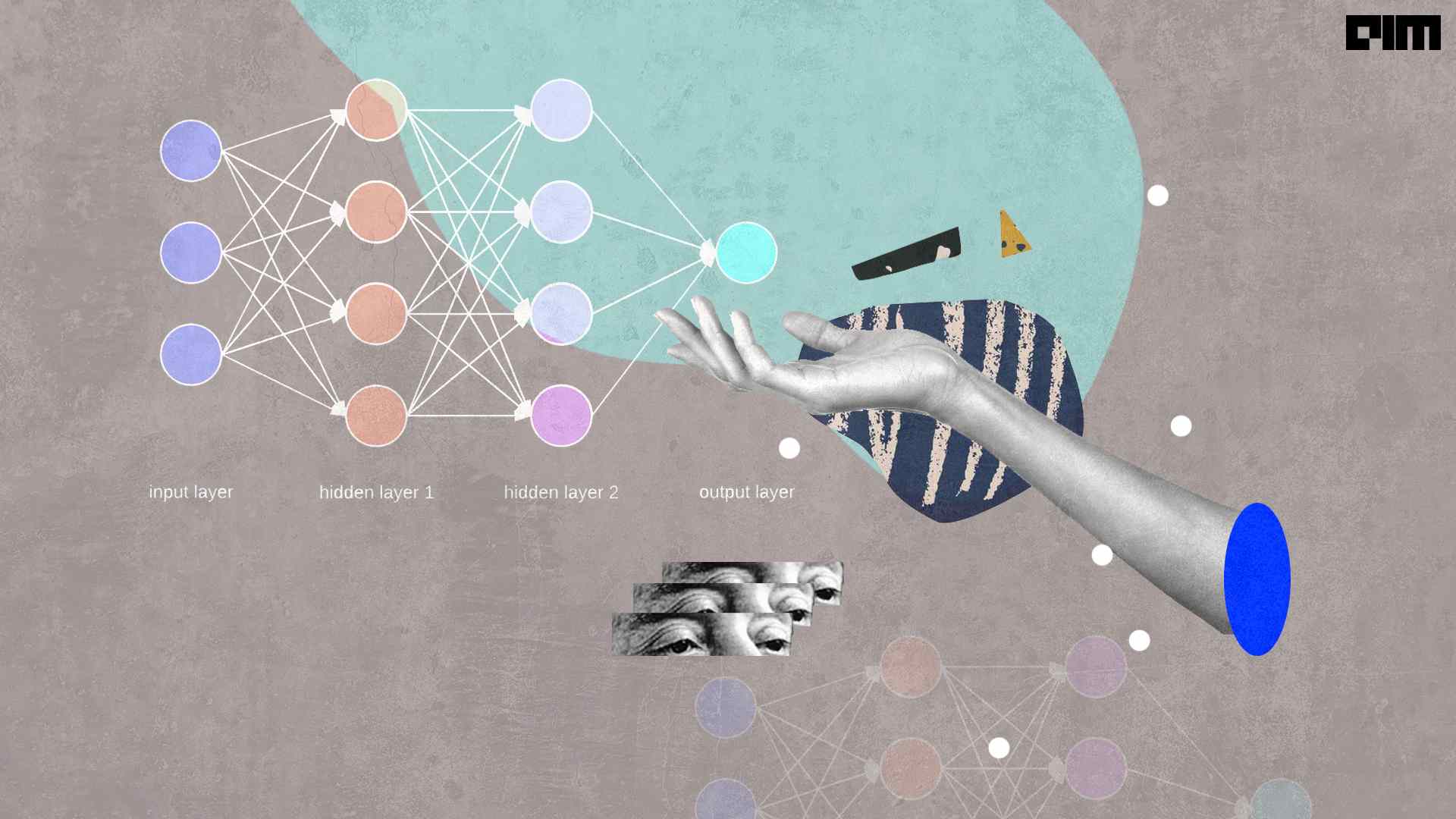

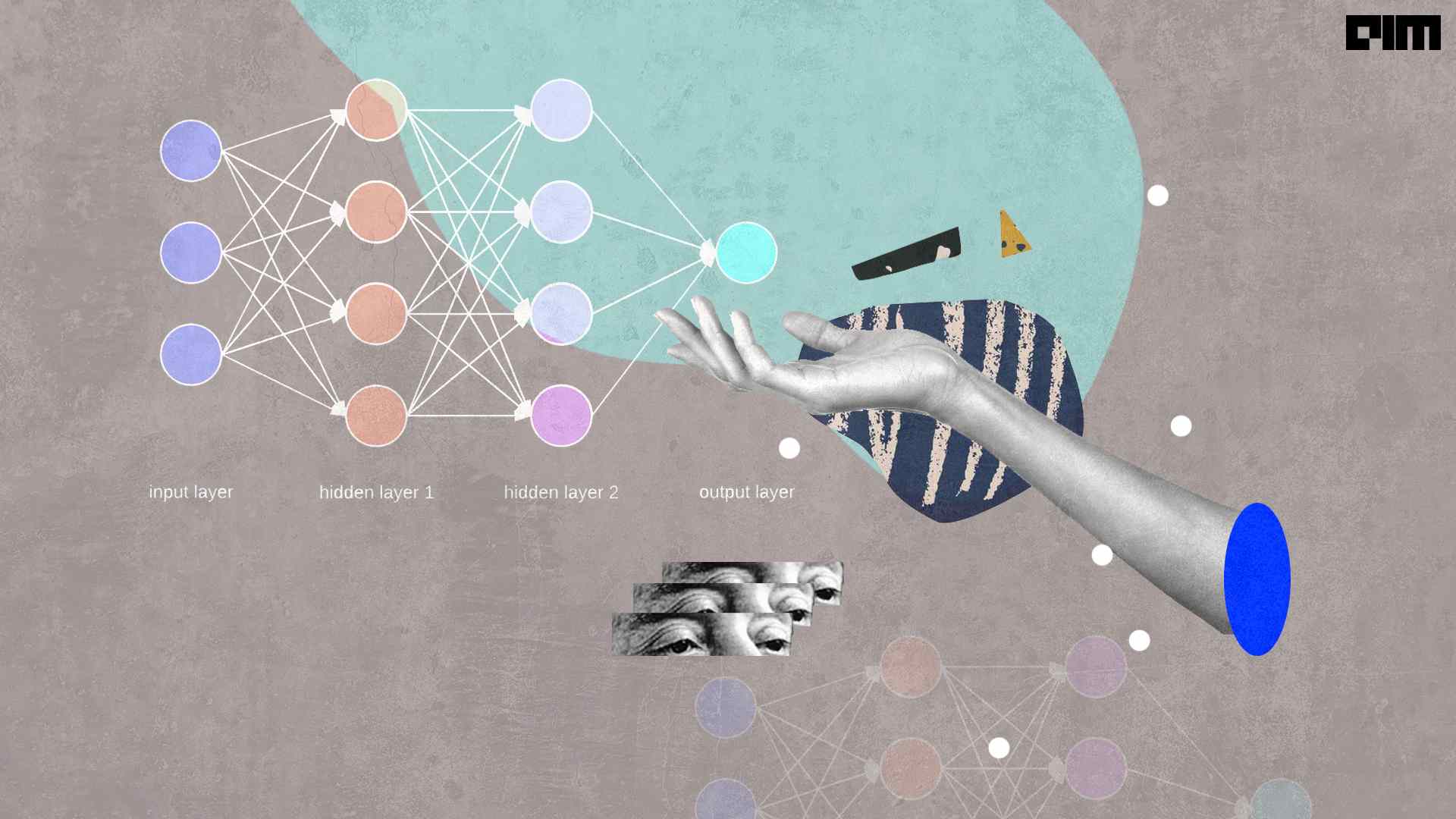

Before 2015, when the first attention model was proposed, machine translation was based on the simple encoder-decoder model: a stack of RNN or LSTM layers. The encoder processes the entire input sequence into a single context vector, which is expected to be a good summary of the input. The final state of the encoder becomes the initial state of the decoder.
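The following toy plain-Python sketch shows that setup. The cell is a simplified element-wise RNN standing in for an LSTM, and all weights are hypothetical constants. Whatever the input length, the encoder hands the decoder a context vector of the same fixed size. That fixed size is the bottleneck attention was later introduced to relieve.

```python
import math

def rnn_step(h, x, w_h, w_x):
    # Simplified Elman-style cell with element-wise (diagonal) weights:
    # h_t[i] = tanh(w_h[i] * h_{t-1}[i] + w_x[i] * x_t)
    return [math.tanh(wh * hi + wx * x) for hi, wh, wx in zip(h, w_h, w_x)]

# Hypothetical fixed weights; a trained model would learn these.
ENC_W_H, ENC_W_X = [0.5, -0.3, 0.8], [1.0, 0.7, -0.4]
DEC_W_H, DEC_W_X = [0.9, 0.9, 0.9], [0.1, 0.1, 0.1]

def encode(inputs):
    h = [0.0, 0.0, 0.0]
    for x in inputs:  # read the whole input sequence, one scalar token at a time
        h = rnn_step(h, x, ENC_W_H, ENC_W_X)
    return h  # final hidden state = the fixed-size context vector

def decode(context, steps):
    h = list(context)  # the encoder's final state is the decoder's initial state
    outputs = []
    for _ in range(steps):
        # A constant placeholder input (0.0) stands in for the previous token.
        h = rnn_step(h, 0.0, DEC_W_H, DEC_W_X)
        outputs.append(sum(h))  # toy read-out from the decoder state
    return outputs

short_ctx = encode([1.0, 2.0])
long_ctx = encode([0.5] * 50)
print(len(short_ctx), len(long_ctx))  # → 3 3
print(decode(short_ctx, 4))
```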

The post Hands-on Guide to Effective Image Captioning Using Attention Mechanism appeared first on Analytics India Magazine.

Perceiver is a transformer-based model that uses both cross-attention and self-attention layers to generate representations of multimodal data. A latent array extracts information from the input byte array through top-down, or feedback, processing.
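This minimal plain-Python sketch of cross-attention shows why a small latent array helps with scale. It omits the learned query/key/value projections that a real Perceiver has, so latents and inputs must share a dimension. N latents attend over M inputs at a cost proportional to N * M rather than M * M. The latents can then run self-attention among themselves cheaply. The input data and latent values are made up for illustration.

```python
import math

def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, inputs):
    """Scaled dot-product attention: queries from one array, keys and values
    from another. Simplification (assumption): no learned Q/K/V projections,
    so raw vectors are used and all must share dimension D.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in inputs])
        out.append([sum(w * v[j] for w, v in zip(weights, inputs)) for j in range(d)])
    return out

# A large "byte array" of M = 1000 inputs summarised by N = 4 latents (D = 2).
inputs = [[math.sin(i), math.cos(i)] for i in range(1000)]
latents = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
summary = cross_attention(latents, inputs)    # cross-attention: N x M scores
refined = cross_attention(summary, summary)   # latent self-attention: N x N scores
print(len(summary), len(summary[0]))  # → 4 2
```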

The post Guide to Perceiver: A Scalable Transformer-based Model appeared first on Analytics India Magazine.

DETR (Detection Transformer) is an end-to-end object detection model that performs both object classification and localization, i.e., bounding-box detection. It is a simple encoder-decoder Transformer with a novel loss function that lets us formulate the complex object detection problem as a set prediction problem.
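Set prediction depends on matching each ground-truth object to exactly one prediction before the loss is computed. This plain-Python sketch uses a simplified matching cost: negative class probability plus L1 box distance. DETR's own cost also includes a generalized-IoU term. The code brute-forces the best one-to-one assignment, which only works for tiny examples; real implementations use the Hungarian algorithm. All boxes and probabilities are made-up values.

```python
from itertools import permutations

def match_cost(pred, target):
    """Simplified DETR-style matching cost (assumption: class term + L1 box
    distance only). pred = (class_probs dict, box); target = (label, box).
    Boxes are (cx, cy, w, h) in normalised coordinates.
    """
    probs, pbox = pred
    label, tbox = target
    class_cost = -probs.get(label, 0.0)  # confident, correct class => lower cost
    box_cost = sum(abs(p - t) for p, t in zip(pbox, tbox))
    return class_cost + box_cost

def bipartite_match(preds, targets):
    """Lowest-total-cost one-to-one assignment of targets to predictions,
    found by brute force. Returns (pred_index, target_index) pairs."""
    best, best_cost = None, float("inf")
    for perm in permutations(range(len(preds)), len(targets)):
        # perm[t] is the prediction assigned to target t.
        cost = sum(match_cost(preds[p], targets[t]) for t, p in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return [(p, t) for t, p in enumerate(best)]

preds = [
    ({"cat": 0.1, "dog": 0.8}, (0.7, 0.7, 0.2, 0.2)),
    ({"cat": 0.9, "dog": 0.05}, (0.3, 0.3, 0.2, 0.2)),
    ({"cat": 0.2, "dog": 0.1}, (0.5, 0.5, 0.9, 0.9)),
]
targets = [("cat", (0.3, 0.3, 0.2, 0.2)), ("dog", (0.7, 0.7, 0.2, 0.2))]
matches = bipartite_match(preds, targets)
unmatched = set(range(len(preds))) - {p for p, _ in matches}
print(matches, unmatched)  # → [(1, 0), (0, 1)] {2}
```

Predictions left unmatched, like index 2 here, are trained to output the "no object" class. This is how DETR avoids duplicate detections without non-maximum suppression.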

The post How To Detect Objects With Detection Transformers? appeared first on Analytics India Magazine.

DeLighT is a deep and lightweight transformer that distributes parameters efficiently across transformer blocks and layers.
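One way to picture non-uniform parameter allocation is a depth schedule that gives blocks near the input fewer layers than blocks near the output. This minimal sketch interpolates per-block depth linearly between a hypothetical minimum and maximum. DeLighT's actual scaling rule also adjusts layer width and may differ in detail.

```python
def blockwise_depths(num_blocks, n_min, n_max):
    """Hypothetical linear depth schedule: block 0 gets n_min layers, the last
    block gets n_max, and blocks in between are interpolated and rounded."""
    if num_blocks == 1:
        return [n_min]
    return [round(n_min + (n_max - n_min) * b / (num_blocks - 1))
            for b in range(num_blocks)]

depths = blockwise_depths(6, 4, 8)
print(depths, sum(depths))  # → [4, 5, 6, 6, 7, 8] 36
```

With these illustrative settings the total is 36 layers, the same as six uniform blocks of 6. The budget is simply shifted toward the output side of the network instead of being spread evenly.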

The post Complete Guide to DeLighT: Deep and Light-weight Transformer appeared first on Analytics India Magazine.

Multi-speaker text-to-speech (TTS) synthesis refers to a system that can generate speech in different users’ voices. With traditional TTS approaches, collecting data and training a model for each user can be a hassle.
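A common way around per-user training is a speaker embedding: a speaker encoder maps a short reference clip to a fixed-length vector, and the synthesizer is conditioned on that vector. This plain-Python sketch shows the bookkeeping around such an embedding. The per-utterance embeddings are hypothetical stand-ins for a trained speaker encoder's output. Concatenating the speaker vector onto each text-encoder frame is one conditioning scheme, assumed here for illustration.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def speaker_embedding(utterance_embeddings):
    """Average per-utterance embeddings and L2-normalise the result.
    Inputs are hypothetical outputs of a trained speaker encoder."""
    dim = len(utterance_embeddings[0])
    n = len(utterance_embeddings)
    mean = [sum(u[d] for u in utterance_embeddings) / n for d in range(dim)]
    return l2_normalize(mean)

def cosine(a, b):
    # Assumes both inputs are unit-length, so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))

def condition(text_frames, spk):
    # Append the same speaker vector to every text-encoder frame, so the
    # synthesizer sees "what to say" and "whose voice" at each time step.
    return [frame + spk for frame in text_frames]

alice = speaker_embedding([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
bob = speaker_embedding([[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]])
new_clip = l2_normalize([0.85, 0.15, 0.05])
print(cosine(new_clip, alice) > cosine(new_clip, bob))  # → True
frames = condition([[0.1, 0.2], [0.3, 0.4]], alice)
```

Because a new voice only requires a forward pass through the encoder, adding a user needs a few seconds of audio rather than a new training run.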

The post Guide to Real-time Voice Cloning: Neural Network System for Text-to-Speech Synthesis appeared first on Analytics India Magazine.