Archives for vanishing gradient

Yann LeCun and team introduce an efficient method for training deep networks with unitary matrices

Long Short-Term Memory (LSTM) is a special kind of RNN capable of learning long-term dependencies in sequences. LSTMs were introduced by Hochreiter and Schmidhuber in 1997 and are explicitly designed to avoid the long-term dependency problem. Retaining information over long stretches of a sequence is how they work by design.

The post LSTM Vs GRU in Recurrent Neural Network: A Comparative Study appeared first on Analytics India Magazine.
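As a rough illustration of the long-term dependency problem mentioned in the excerpt above, the sketch below compares how a gradient shrinks through a plain scalar recurrence with how it behaves along an additive, gated cell state. It is a toy model and not code from the article: the function names, the weight `0.9`, and the constant forget gate `0.99` are all assumptions chosen for illustration. In a real LSTM the forget gate depends on the input and gradients also flow through other paths.

```python
import math

def rnn_gradient(w, h0, steps):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad

def cell_state_gradient(forget_gate, steps):
    """Return d c_T / d c_0 for c_t = f * c_{t-1} + (input term), f held constant."""
    # Assumption: the forget gate is a fixed number, which a trained LSTM does not guarantee.
    return forget_gate ** steps

plain = rnn_gradient(w=0.9, h0=0.5, steps=50)
gated = cell_state_gradient(forget_gate=0.99, steps=50)
print(f"plain recurrence, gradient after 50 steps: {plain:.3e}")
print(f"gated cell state, gradient after 50 steps: {gated:.3e}")
```

Under these assumptions the plain recurrence's gradient is bounded by `0.9 ** 50`, while the gated path keeps a gradient of `0.99 ** 50`, which is the intuition behind letting the network hold a forget gate close to 1.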

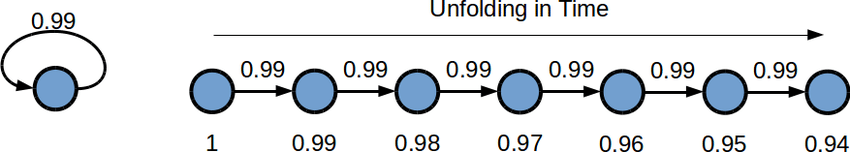

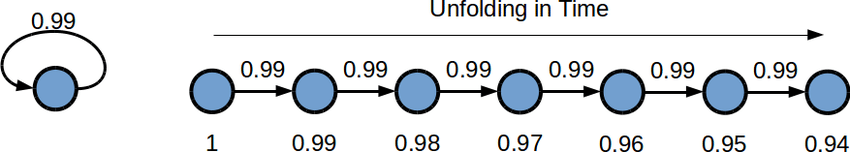

Traditional feed-forward neural networks handle time-series data and other sequential data poorly. Such data can be as volatile as stock prices or as continuous as a video stream from the on-board camera of an autonomous car. Handling time-series data is where RNNs excel. They were designed to grasp the information…

The post What Are The Challenges Of Training Recurrent Neural Networks? appeared first on Analytics India Magazine.